AE Golf Performance Newsletter

Better Use of Data in Golf

Why it pays off to take a breath and ask better questions about your data/tech

The Data & Tech Revolution in Golf

Why it pays off to take a breath and ask better questions

In recent years, I have gradually become more and more involved with the data and tech side of golf (and other sports). This started with my scientific research, where data and measurement tools are a natural part of the job. But since leaving academia, I have been entrenched in the world of sports technology and data on a day-to-day basis, with a greater focus on real-world problem solving and applications.

For example, I left my university job to consult full time with the research & innovation team at Fenris Group, working to develop innovative uses of markerless motion capture and force plates to analyze golf swing biomechanics and broader sports science use-cases.

I am now a full-time sports scientist for Exerfly, a company that manufactures high-end flywheel training systems, while also consulting with numerous coaches and facilities to help them better leverage data/tech. This includes a current position leading the data and sports science efforts at the new Sports Academy PGA Frisco location, housed within the PGA of America Coaching Center.

A key lesson that has emerged across these experiences: data and tech have never been more accessible, but we also need to develop 1) more effective strategies for vetting which tools/data are actually useful and 2) strategies for using data/tech more efficiently.

My goal with this post is to get you thinking about basic concepts of data/tech in golf. And if you want to dive deeper or work with me, then feel free to reach out via the form at the end of the post (or directly via Instagram).

What is Data?

I believe many golfers have misconceptions about what data is and what it is not.

Data is information. This information can be simple or complex. It can come from an expensive piece of technology, or something as simple as asking a golfer how they feel.

When used effectively, that information can give us actionable insights to drive better decision making and problem solving.

But more information is not always better. It could just accumulate, adding little value. Or even worse, poorly measured or interpreted data could mislead you or distract you from what matters.

Many people associate data with technology. But sports technology is often simply a means of providing data, ideally with the ability to be precise, measure what is normally challenging to measure, and/or streamline the process of gathering information. There are times when low- or no-tech options are just as good as, or even superior to, tech-driven data. For example, subjective measures of sleep quality/recovery often perform as well as (or better than) physiological devices when it comes to monitoring an athlete’s recovery or readiness to train (note: cross-referencing both is usually my preferred approach).

Not all tech is equally valid/reliable. Just because something produces data does not mean that data is inherently accurate or useful. Tech is a powerful tool when leveraged correctly, but it also has limitations.

With all this in mind, I want to briefly dive into a concept I posted about a while back on Twitter/X and Instagram: questions to ask when using data/tech.

Question 1: Is it useful and actionable or just interesting and accessible?

A common issue I see is using data/tech simply because you can and because the data it produces are interesting. But accessible and interesting does not necessarily mean useful and actionable. More is not always better if it distracts you from what matters most or sends you down the wrong path because you do not have a plan for how to use it. At best, I often see this data just accumulating in a database with no clear use.

Question 2: How Noisy is Your Data? Do You Have a Plan to Separate the Signal from the Noise?

The variability of a test is determined by both 1) the natural variability you have from rep to rep (e.g., you do not always swing at exactly the same speed) AND 2) any additional noise introduced by the testing method/tool itself. The more total variability, the harder it is to determine whether a change was real or just noise.
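When the two sources are independent, their variances (SD squared) add, so the total SD is the square root of the sum of the squared SDs rather than their simple sum. A minimal sketch in Python, assuming independent sources; the function name `total_sd` and the mph values are hypothetical, chosen only for illustration:

```python
import math


def total_sd(natural_sd: float, measurement_sd: float) -> float:
    """Combine two independent sources of variability into one total SD.

    Variances (SD squared) add for independent sources, so the total SD is
    the square root of the sum of the squared SDs.
    """
    return math.sqrt(natural_sd ** 2 + measurement_sd ** 2)


# Hypothetical values (mph): 3.0 of true swing-to-swing variation
# plus 4.0 of extra noise from the measurement tool.
print(total_sd(3.0, 4.0))  # 5.0
```

In this made-up case, cutting the tool's noise from 4 to 2 mph would drop the total from 5.0 to about 3.6 mph, even though the swing itself is no more consistent.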

For example, if your ball speed values bounce around by ~5% across multiple swings, it can be hard to determine whether a 3% change on any given swing is due to an actual change or just variability.
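One way to put a number on how much swings bounce around is the coefficient of variation (CV), the standard deviation expressed as a percentage of the mean. A minimal sketch, assuming a single session of hypothetical ball speeds; the helper names and the simple rule of comparing a single-swing change against the CV are illustrative assumptions, not a formal statistical test:

```python
import statistics


def coefficient_of_variation(values):
    """Sample standard deviation as a percentage of the mean (CV%)."""
    return statistics.stdev(values) / statistics.mean(values) * 100


def percent_change(reference, new_value):
    """Percentage difference of new_value relative to reference."""
    return (new_value - reference) / reference * 100


# Hypothetical ball speeds (mph) from a single session.
ball_speeds = [150.0, 140.0, 158.0, 145.0, 160.0, 143.0]
cv = coefficient_of_variation(ball_speeds)  # roughly 5.5% for these values

# A hypothetical new swing about 3% faster than the session mean.
new_swing = 153.8
change = percent_change(statistics.mean(ball_speeds), new_swing)

# A single-swing change smaller than the typical spread is hard to
# separate from normal swing-to-swing variability.
print(f"CV: {cv:.1f}%, change: {change:.1f}%, within noise: {abs(change) < cv}")
```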

There are a few things you can do to help with this process:

Pick solid tools/methods: When possible, use quality measurement tools to minimize the variability due to error (this could be a high-end technology option, but does not have to be. There are valid/reliable low-tech options for many things we want to measure).

Pick a solid testing protocol: If you are going to compare results across golfers or over time, carefully consider how you are going to standardize the testing procedures. The more consistent the testing, the less potential noise you introduce. This could include the same tools/methods, time of day, pre-testing warm-up, and the testing environment (music playing? other people watching or framed as a competition?).

Interpret the data effectively: Regardless of how much variability there is, you need effective ways of interpreting the results. When I am interpreting golf-related data, I usually try to: 1) account for the variability of the measurement (how much does it typically vary swing-to-swing, or round-to-round), and 2) think in terms of patterns vs individual shots/rounds (it can be hard to make good decisions off the result of one swing, but when your overall patterns and tendencies change that can be much more insightful).
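The value of looking at patterns rather than single shots can be made concrete: assuming swings are independent, the average of n swings varies by roughly SD divided by the square root of n (the standard error), so block averages are much steadier than individual swings. A minimal sketch with hypothetical numbers; `standard_error` and `block_means` are illustrative helper names:

```python
import math
import statistics


def standard_error(sd: float, n: int) -> float:
    """Typical variability of an average of n independent swings."""
    return sd / math.sqrt(n)


def block_means(values, block_size):
    """Average consecutive, non-overlapping blocks of swings.

    Any incomplete block at the end is dropped.
    """
    return [
        statistics.mean(values[i:i + block_size])
        for i in range(0, len(values) - block_size + 1, block_size)
    ]


# Hypothetical swing-to-swing SD of 8 mph in ball speed.
for n in (1, 4, 9):
    print(n, round(standard_error(8.0, n), 2))

# Hypothetical ball speeds (mph), summarized in blocks of four swings.
swings = [150.0, 140.0, 158.0, 145.0, 160.0, 143.0, 152.0, 149.0]
print(block_means(swings, 4))  # [148.25, 151.0]
```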

Question 3: How Valid/Accurate is Your Method?

We’re in an era of rapid technological innovation. New products and devices are launching every week. Data availability is no longer an issue. But just because something provides data does not mean that data is accurate/valid.

You do not always need to use the most expensive/research grade tech possible. There is always a balance between accuracy and constraints (e.g., cost, time, expertise required to use it, etc.). But you should have a good understanding of how valid your tech/methods are and take this into account when interpreting the results.
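One widely used way to check a device against a reference (criterion) measure is a Bland-Altman analysis, which reports the mean difference between the two methods (bias) and the 95% limits of agreement (bias ± 1.96 × SD of the differences). A minimal sketch, assuming you have paired readings of the same swings from both systems; the readings below are invented, and this is only one of several ways to assess validity:

```python
import statistics


def bland_altman(device, reference):
    """Return (bias, lower_limit, upper_limit) for paired measurements."""
    if len(device) != len(reference):
        raise ValueError("device and reference readings must be paired")
    diffs = [d - r for d, r in zip(device, reference)]
    bias = statistics.mean(diffs)  # average over- or under-reading
    sd = statistics.stdev(diffs)   # spread of the disagreement
    return bias, bias - 1.96 * sd, bias + 1.96 * sd


# Hypothetical club speed readings (mph) for the same five swings.
device = [101.0, 98.5, 104.0, 99.0, 102.5]
reference = [100.0, 98.0, 102.0, 99.5, 101.0]

bias, lower, upper = bland_altman(device, reference)
print(f"bias {bias:.2f} mph, 95% limits of agreement {lower:.2f} to {upper:.2f} mph")
```

A consistent bias can often be corrected for, while wide limits of agreement mean any individual reading is less trustworthy.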

Here’s a post summarizing a “Sports Technology Framework” that was published recently. I think something like this should be adopted and used within golf. It’s not about categorizing products or tech as “good” or “bad.” It’s about understanding what each brings to the table, potential limitations, and the characteristics that are most important for your setting or the specific problem/use-case.

Sports Technology Framework Post

This can be a tricky task if you’re not well versed in how the data/tech works, and it is often an area where facilities contact me for help. But I would recommend that everyone maintain a healthy level of skepticism when approached with new tech, while acknowledging that sometimes you need maximal precision and other times “good enough is good enough,” as long as you know this going in and interpret the results accordingly.

Question 4: What Constitutes a Real or Meaningful Change?

If you’re going to use data to help inform what you do and the decisions you make, you need to establish what constitutes a meaningful change. This is determined both by the error/noisiness of the data itself and by the specific context in which the data are collected.

I generally think there are three big things to determine when looking at how a data point changes.

Is the change “real” or just noise? This goes back to understanding that the more variable a measure is, the larger the change required to be confident you made an actual change.

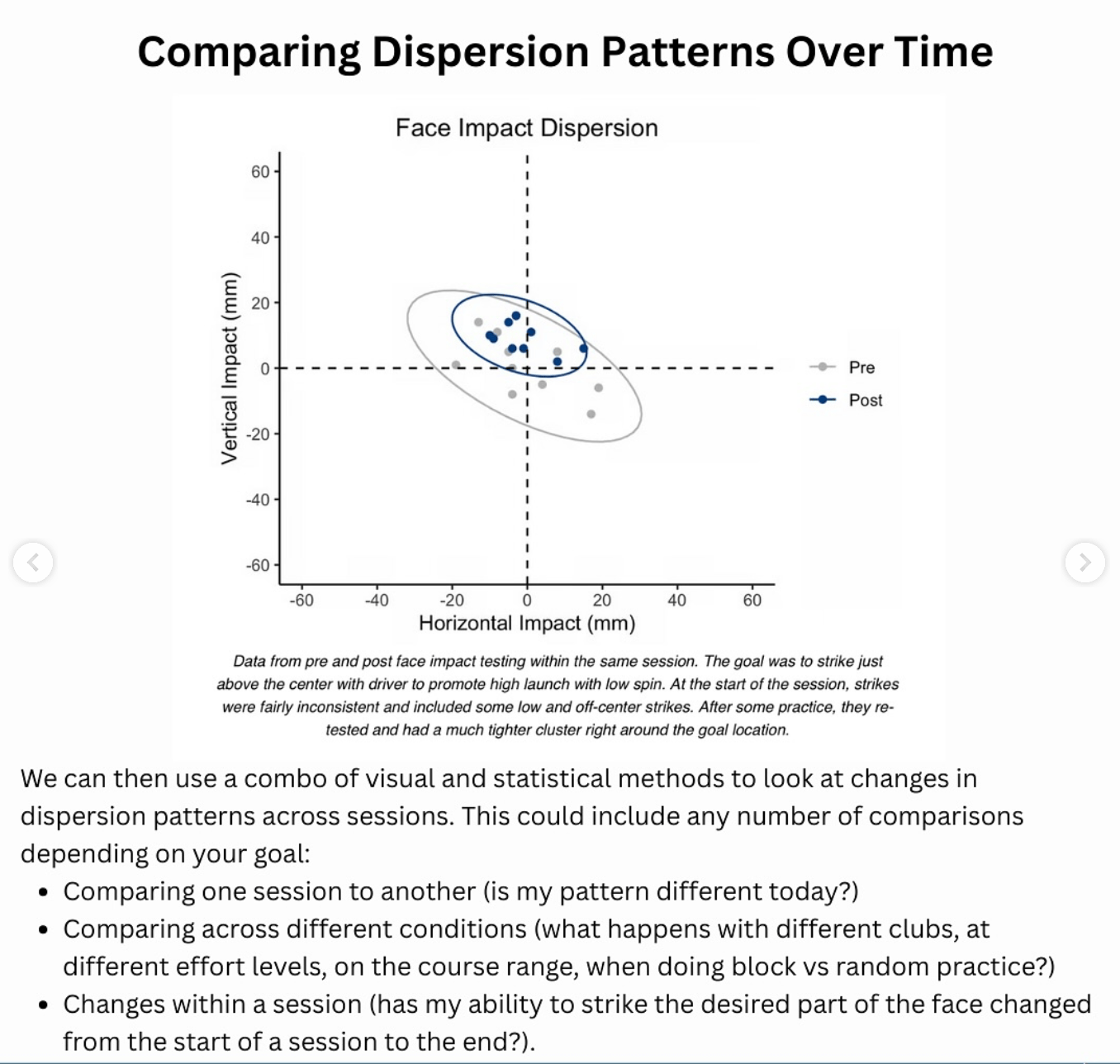

Thinking in terms of patterns/dispersions vs isolated data points: golf improvement is often about changing patterns and tendencies across numerous shots. For example, if you felt the need to make a change any time you mishit a shot, you would be in an endless cycle of tinkering and changing. Instead, we can account for variability using strategies like looking at dispersion patterns (see example with face impact below).

Is the change practically meaningful? The above points address whether we think the data actually changed and whether the pattern as a whole changed. But we also need to consider whether a change of that size is likely to be practically meaningful. For example, a 3% change could be impactful for certain measures while being far less impactful for others.
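The first and last checks can be combined into a simple decision rule. A minimal sketch, assuming you have already chosen two thresholds for a given measure: a noise threshold (e.g., its typical variability) and a practical threshold (the smallest change you would care about in this context). The function name, labels, and mph values are hypothetical:

```python
def classify_change(change: float, noise: float, practical: float) -> str:
    """Label a change using two user-chosen thresholds (same units as change).

    noise: how much the measure typically varies on its own.
    practical: the smallest change that would matter in this context.
    """
    size = abs(change)
    if size <= noise:
        return "possibly noise"
    if size < practical:
        return "likely real, but small in practice"
    return "likely real and practically meaningful"


# Hypothetical ball speed thresholds (mph): noise of 2.0, practical target of 3.0.
for delta in (1.5, 2.5, 4.0):
    print(delta, classify_change(delta, noise=2.0, practical=3.0))
```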

Here are some examples.

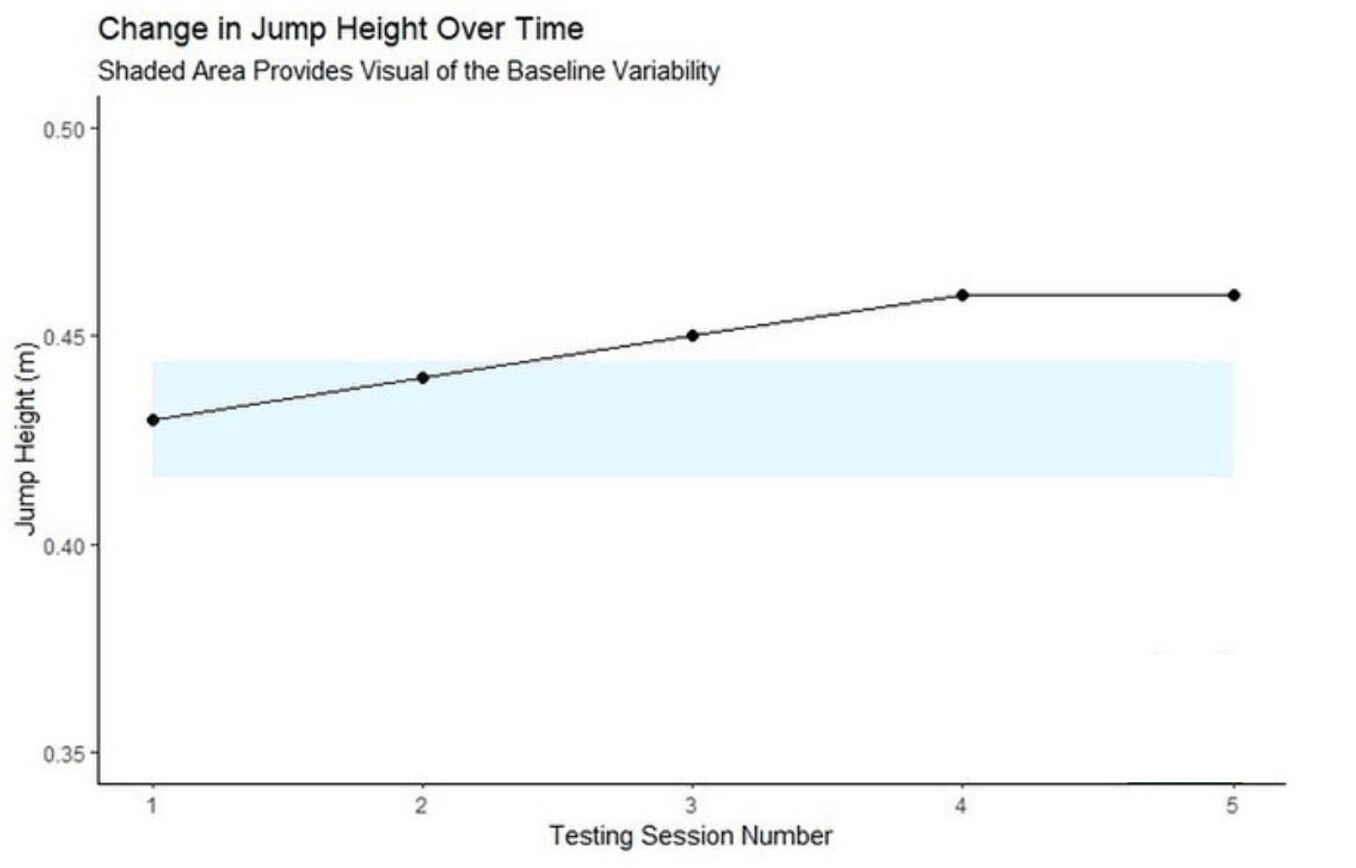

A simple example of using the standard deviation of jump height at baseline to set a threshold for what would be a “real change.” In this case, the blue shaded region is the first data point plus and minus the standard deviation of the test. The second data point increased, but not beyond the variability of the test, so I would interpret this a bit cautiously. But by the 3rd testing session, the increase is large enough to be more confident it is a “real change.”

From this post: https://www.linkedin.com/in/alex-ehlert-phd/recent-activity/images/
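The band logic from the jump height example can be sketched in a few lines. This is a minimal sketch, assuming the band is the first session's value plus or minus the baseline SD of the test; the function name, jump heights, and SD below are invented and are not the values from the figure:

```python
def outside_baseline_band(sessions, baseline_sd):
    """Flag each session whose value falls outside first value ± baseline SD."""
    baseline = sessions[0]
    lower, upper = baseline - baseline_sd, baseline + baseline_sd
    return [not (lower <= value <= upper) for value in sessions]


# Hypothetical jump heights (cm) across three testing sessions.
jump_heights = [40.0, 41.2, 43.5]
flags = outside_baseline_band(jump_heights, baseline_sd=1.5)
print(flags)  # [False, False, True]
```

As in the figure, the second session rises but stays inside the band, while the third clears it.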

An example of creating a dispersion pattern from face impact data (where on the club face each shot is struck). I prefer dispersions in this case because it shows your overall patterns across multiple shots rather than just focusing on individual shots or the average position. There are then statistical methods you can use to see how much it changed, though even a visual inspection can be useful. Excerpt from this post: https://www.instagram.com/p/DIORxEBuHnu/?img_index=1
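A dispersion pattern can also be summarized numerically. A minimal sketch, assuming impact locations are logged as (x, y) offsets from the center of the face in millimeters; the coordinates are made up, and centroid plus per-axis SD is just one simple summary, not necessarily the statistical method referenced above:

```python
import statistics


def dispersion_summary(impacts):
    """Return the centroid and per-axis SD for (x, y) impact locations."""
    xs = [x for x, _ in impacts]
    ys = [y for _, y in impacts]
    return {
        "centroid": (statistics.mean(xs), statistics.mean(ys)),
        "sd_x": statistics.stdev(xs),
        "sd_y": statistics.stdev(ys),
    }


# Hypothetical impact locations (mm from face center; assumed axis convention:
# +x toward the toe, +y toward the crown).
before = [(8.0, -4.0), (12.0, 2.0), (5.0, -6.0), (10.0, 1.0), (15.0, -3.0)]
after = [(2.0, 0.0), (-1.0, 1.0), (3.0, -2.0), (0.0, 2.0), (1.0, -1.0)]

for label, shots in (("before", before), ("after", after)):
    s = dispersion_summary(shots)
    print(label, s["centroid"], round(s["sd_x"], 1), round(s["sd_y"], 1))
```

Here the "after" pattern is both closer to the center of the face and tighter, a change in overall tendency rather than in any single strike.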

Question 5: How am I going to analyze the results?

Many people start from what data they can collect and then figure out how to analyze or interpret it later. But I would recommend starting from the questions or problems you are trying to solve, and then determining the best data and analysis method to address them. The way you intend to analyze data and the question you are trying to answer can drive which data to collect (and how to collect it).

Question 6: How Should I Communicate the Findings?

Cool data-informed insights mean very little if you cannot communicate them effectively. This is also context-specific: some people want the nitty-gritty details, while others just want to know the basics and what they need to do. This is why I think technical expertise and access to data are only one small part of the toolkit. Having a strong understanding of the real-world problems and challenges that golfers and coaches face, and the way they prefer to operate, can go a long way toward making a positive impact.

WHILE YOU’RE HERE

SUBMIT REQUESTS, QUESTIONS, OR FEEDBACK

If you want to work with me, have questions, or have recommendations for future content, follow the link below and submit a response. This link will be available in every issue of the newsletter, so you will have opportunities to do this in the future as well!